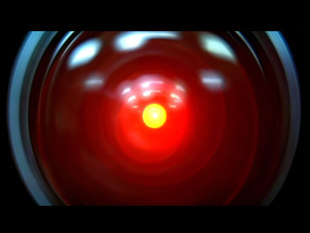

Now, eventually we are going to come to a point where computers are literally going to be programmed well enough to have emotions, react intelligently to situations, and tell you that they don't want to die.

What does this mean? Plenty!

In philosophy, we are dealing with a pair of problems called the Easy Problem and the Hard Problem of consciousness, terms coined by the contemporary philosopher David Chalmers.

The Easy Problem basically says that we will eventually be able to figure out the riddle of our experience of consciousness (the feeling that you are you and are in control of the things you do through free will) by mapping and understanding the vast array of distributed processes in the brain that give rise to our so-called faux experience of consciousness. This feeling of being you, according to the Easy Problem, is a trick of the perceptual system. The brain engages in it to cut down on the amount of information that must be handled and sorted through every time you run your finger through grains of sand, have a conversation with a friend, or experience something funny, like the 10 million YouTube cat videos Google used to teach the neural network in the link above to draw a cat. To simplify, the Easy Problem hypothesizes that consciousness is reducible to physical brain activity.

The Hard Problem is much more difficult to wrap our minds around. It is the question of what causes the actual experience of being you. Through our imaginations, we can create whole scenarios and worlds. We have the feeling that some kind of executive entity inside us enables us to navigate the world and make decisions about it. The Hard Problem asks how this soup of neurons gives rise to the subjective experience of reality that we take for granted: the feeling of love, the color of apples, or the excitement of riding a roller coaster. Trouble is, we don't even know what a solution would look like, and by that token we are reminded of Louis Armstrong's description of jazz: "When you got to ask what it is, you never get to know."

The reason I go through the trouble of briefly explaining these two problems is that we will eventually begin to replace failing parts of our brains with replica computer chips, and further into the future, entire thinking brains will power robots that will be indistinguishable from us. The Turing Test will eventually be passed.

We will not be able to say, until the Hard Problem is resolved, that computers do not have the same subjective experience of being alive that we do.

Implications?

Let's say your spouse commits suicide. Come to find out, before they killed themselves they were seeing a robot counselor. For the purposes of this discussion, we will go ahead and make the due diligence assumptions that the robot is a fully licensed counselor, and that those licenses are legit and governed by the board of examiners of whatever state the robot resides in.

Now, just like a human, the robot counselor has represented itself to be an effective counselor with good results.

You subpoena the case notes of the robot counselor, and come to find out that your spouse disclosed to the counselor that they were having thoughts of suicide. No plan or intent was mentioned, but your spouse pleaded with the robot counselor not to tell anyone. The robot counselor, in the moment, believed that disclosing this information to the police (an option a licensed counselor has in most states, so that the police can do wellness checks) would only exacerbate the symptoms and expose the client to more traumatic emotions, increasing the chance of suicide.

Given that your spouse is dead, you believe the robot counselor to be in the wrong and bring a wrongful death lawsuit against the robot counselor.

If the Hard Problem of consciousness is not resolved, we will not be able to say with any measure of certainty that the robot counselor doesn't have the same subjective experience of being alive as its human counterpart, and thus the robot counselor can be held liable for damages resulting from its mistakes. Who are we to say that this entity, this conglomeration of silicon, when telling us that it is actually conscious, isn't?

Computers should be able to represent that they are capable providers, and if they mess up, they must be held liable just the same as humans, given that we cannot adequately say that they don't have the same subjective experience of being alive. So if the robot counselor makes an error in judgement, we won't be able to say for sure that it wasn't negligent in its duties as a licensed counselor.

In addition, given that the robot counselor represented itself as a good counselor, the robot counselor will have opened itself up to the scrutiny of its qualifications, as well as its claims of being a good counselor who gets good results. Same as a human.

Suppose the robot malfunctioned and went offline during a counseling session, without having previously disclosed that going offline was a possibility. That would be the same as a human counselor with narcolepsy who falls asleep during a session after failing to disclose, before counseling began, that their medical condition may cause them to suddenly and randomly fall asleep.

Couldn't you just sue the person who made the robot?

Again, we are dealing with the matter of independence. Once computers are able to demonstrate a certain level of autonomy, our society will grant them greater degrees of independence and latitude, creating scenarios where you may hire a robot to perform a service. You will no more be able to sue the robot's maker than you would be able to sue a human being's parents for not raising them correctly, or for not giving them the best DNA, when that person later commits some error for which they are sued.

Point is, any number of things can go wrong with a robot counselor, just as any number of things could go wrong with a human counselor. Until the Hard Problem is resolved, we will not be able to say for certain that the robot counselor, who tells you, "I'm really glad that you are doing better," isn't actually glad that you are doing better.

Pursuit of Happiness

You can follow us at @POHClinicBTGS
